ChatGPT-5 was going to be smarter.

All summer, leading up to the August 7th launch of ChatGPT-5, Sam Altman kept coming back to one refrain: ChatGPT-5 is going to be “smarter.” We heard it again and again:

“We can say right now with a high degree of scientific certainty that GPT-5 is going to be a lot smarter than GPT-4.”

“GPT-4 is the dumbest model any of you will ever have to use again by a lot.”

“The thing that will really matter: It's gonna be smarter.”

Why didn’t any of us ask him what OpenAI meant by “smarter”?

The rollout of a smarter ChatGPT. What happened?

I think most of us know what happened next, but I asked Gemini to give a quick synopsis:

Upon the rollout of ChatGPT-5 on August 7, 2025, OpenAI abruptly removed all previous models, including the widely used GPT-4o, forcing users onto the new system.

Despite Sam Altman’s promises of a "smarter" model and a "PhD-level" experience, the move was met with widespread public uproar.

Users complained that the new model felt "dumber," "colder," and less creative. In response to the backlash, OpenAI quickly reversed course.

Within days, they restored access to GPT-4o and other legacy models for paying users and acknowledged the botched launch, with Altman stating they had "underestimated" how much users valued the personality and emotional nuance of the previous models.

I’ve been thinking about this a lot, and I think there are a few things to learn here:

AI has such a wide variety of use cases. No two people are using ChatGPT in exactly the same way.

We don’t agree on what “smarter” AI looks like. My individual AI use case drives my definition of “smarter” AI. If I’m primarily using ChatGPT to brainstorm ad agency pitch concepts, then a “smarter” ChatGPT will be a better creative brainstorming partner. In contrast, if I’m a software engineer and ChatGPT is my debugger, then “smarter” means it becomes a more adept coder.

These days, we tend to define “smart” as STEM-smart. The developers of ChatGPT are machine learning and software engineers. I’m writing this from the campus of Stanford—we all know that Stanford-smart equates to quantitative achievements: GPAs, SATs, evidence-based quantitative research. And since the dawn of the scientific age, “smart” has meant quantifiable, measurable, analytic, scientific.

OpenAI is led by engineers, and when engineers say “smart,” they tend to mean STEM-smart. OpenAI team members have a bias toward STEM-related definitions of the word. My hunch is that non-STEM use cases are poorly understood and underrepresented inside ML/AI development teams.

When Sam Altman and the product leadership at OpenAI said “ChatGPT-5 is going to be smarter,” they meant “ChatGPT-5 is going to be better at coding.”

Long story short? To a software developer, “smarter” means “better at coding.” Frontier generative AI companies are building the solutions they value. AI is developed by engineers, largely for engineers. Makes sense…but good to be aware of.

Infinite AI use cases. One “smarter” measuring stick.

The question “What does smarter AI look like?” is a lot like the question “What is the best college in the United States?” Depends on who you are and what you are trying to do.

When it comes to college, we’ve somehow allowed U.S. News & World Report to declare itself the arbiter of the “best” colleges. It gives each university an annual score and measures all schools against one another, regardless of specialty, niche, or student goals. It ranks Dartmouth, the University of Miami, and Smith College on a single list called “Best Colleges.”

The list ends up driving how the schools develop. I fear the same will happen with AI, even though most of ChatGPT’s user base is NOT using it to code.

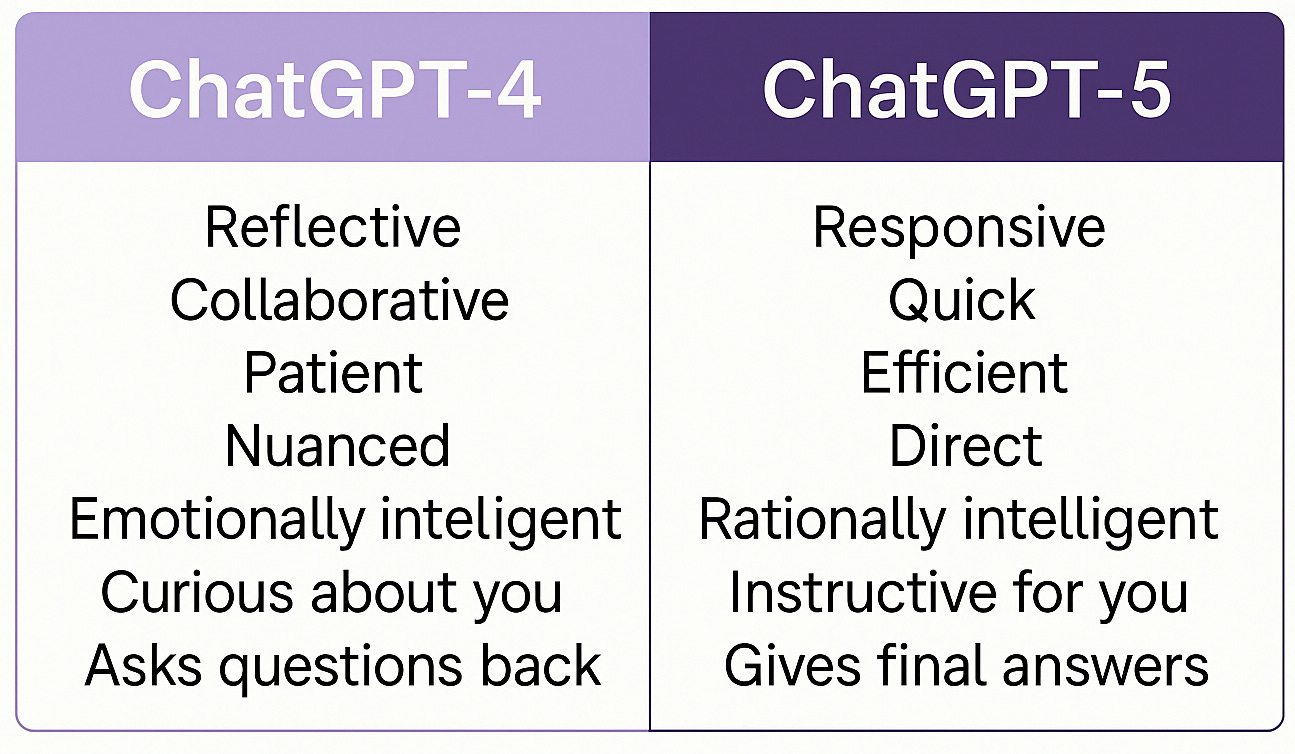

There is not a single centralized definition of “smarter” AI. ChatGPT-4o and ChatGPT-5 have different strengths and flaws. I asked ChatGPT-5 about each model’s strengths. Here’s what it said:

I then asked Gemini for its thoughts on the “which is smarter, ChatGPT-4o or GPT-5?” debate. I like the response: …and this is a good summary:

The key takeaway is that OpenAI's "upgrade" was a trade-off. GPT-5 is demonstrably smarter and more reliable for what could be called "left-brained" tasks: logic, coding, math, and factual accuracy. It's the superior choice for professionals who need a powerful, trustworthy tool for mission-critical work.

However, many general users found that GPT-5 sacrificed the "right-brained" warmth, creativity, and conversational feel that made ChatGPT-4o so appealing for day-to-day use. This created a sense of "downgrade" for people who use the model for brainstorming, creative writing, or as a friendly sounding board.

Is an AI model acting “smarter” or “ethical”? Is it “good” or “bad”? We need to step back and define these things among humans first.

To receive new posts and support The Bridge AI, consider becoming a free subscriber.
